Peer-reviewing Frontiers

Surveys among thousands of our authors, editors, and reviewers show high overall satisfaction with Frontiers, but also yield constructive criticisms which help us to improve our processes and platform.

Frontiers is determined to let researchers shape scholarly publishing. It is therefore important that we listen to you, the researchers. Send us your suggestions, your criticisms, your requests – we welcome them. We don’t claim to be perfect, but we are constantly evolving, based on feedback from the scientific community. It is particularly easy for Frontiers to make improvements, because we are the first and only publisher to have built our IT platform in-house and from scratch, ensuring maximum flexibility of our system and processes.

Every day, Frontiers actively looks for such feedback, for example through conference calls and meetings with our editorial boards, through discussions with researchers at conferences around the world, and by tracking comments on social media. Another way is through continuous user satisfaction surveys.

Here, we give a summary of results from two such surveys, with a combined response of over 11,000 Frontiers authors, editors, and reviewers. These surveys point to considerable satisfaction with Frontiers, but also yield constructive criticisms which allow us to improve further.

The surveys in brief

First, between January 2013 and December 2015, immediately after submission, all submitting authors were invited to fill out a survey about their satisfaction with Frontiers’ submission process. This “post-submission survey” was completed by 8031 submitting authors – at a tremendous response rate of 20.7%.

Second, between January 2014 and December 2015, upon publication of each article, all corresponding authors, handling editors, and reviewers were invited to fill out a survey about their satisfaction with Frontiers’ collaborative review process, Frontiers’ interactive review forum, and the support received from Frontiers staff. This “post-publication survey” was completed by 1992 corresponding authors (5.1% response rate), 217 editors (1.7%), and 813 reviewers (3.1%).

In both surveys, the questions were multiple-choice, with answers on different 5- or 6-point Likert-type scales. Participation in the surveys was always voluntary and anonymous. For the sake of brevity, only the responses to 7 comprehensive questions from the surveys are shown here (Figs. 1-7), but the other questions all yielded similar results. Naturally, we warmly thank all participants for their valuable feedback.
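As a rough sanity check on the figures above, the short Python sketch below adds up the respondent counts and estimates how many invitations each response rate implies. The function name `implied_invitations` and the dictionary layout are illustrative assumptions, not part of the surveys themselves. Because the reported response rates are rounded, the invitation counts are approximations only.

```python
# Respondent counts and response rates as reported in the text above.
surveys = {
    "post-submission authors": (8031, 0.207),
    "post-publication authors": (1992, 0.051),
    "post-publication editors": (217, 0.017),
    "post-publication reviewers": (813, 0.031),
}


def implied_invitations(respondents, response_rate):
    """Rough estimate of invitations sent: respondents / response rate.

    Hypothetical helper for illustration; the rates are rounded,
    so the result is only an approximation.
    """
    return round(respondents / response_rate)


# Combined number of completed surveys across both surveys.
total_respondents = sum(count for count, _ in surveys.values())
print(f"Combined responses: {total_respondents}")  # prints 11053

for group, (count, rate) in surveys.items():
    estimate = implied_invitations(count, rate)
    print(f"{group}: {count} responses, roughly {estimate} invitations")
```

The combined total of 11,053 is consistent with the "over 11,000" responses mentioned earlier. The same person may have answered more than once (for example, as both author and reviewer), so this counts completed surveys rather than unique individuals.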

Feedback on Frontiers’ submission process

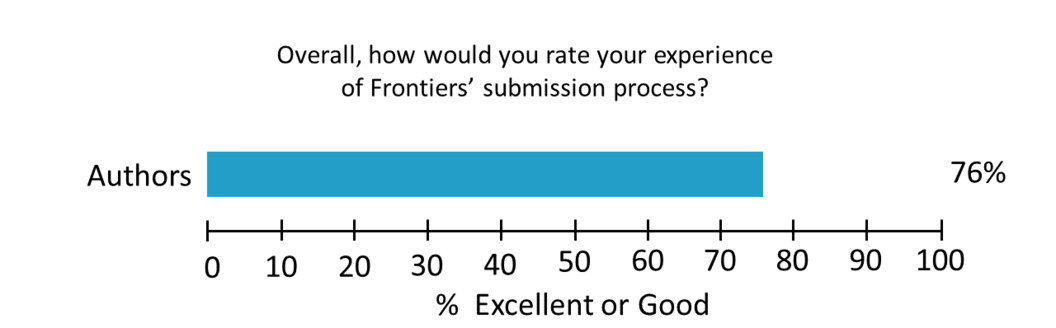

In the post-submission survey, 80% of respondents rated Frontiers’ submission process as “Excellent” or “Good” overall (Fig. 1).

Fig 1. The percentage of respondents to the post-submission survey who rated Frontiers’ overall submission process as either “Excellent” or “Good”. Answers were on a Likert-style scale: “Excellent”, “Good”, “Average”, “Below average”, “Poor”, “I don’t know”.

Praise by anonymous authors for the submission process included:

“This was the easiest and most pleasant experience I ever had submitting a manuscript.”

“Excellent submission portal. Best I’ve used.”

“The easiest, and fastest submission system I have ever used.”

However, responses to the survey also show that there is scope to improve our submission system further. We received many welcome suggestions, for example to support LibreOffice and Open Office documents; to make the submission of figures and supplementary materials easier and more flexible; to make the submission process faster; to simplify the process for adding authors and affiliations; and to improve navigation of the submission interface. Frontiers looks forward to implementing these improvements whenever possible.

Feedback on Frontiers’ peer-review process and Review Forum

Frontiers’ peer-review process is unique. Our peer review focuses on objective criteria – the scientific validity and soundness of the submission – and requires consensus of reviewers for acceptance or rejection. It is systematic and rigorous, because reviewers use standardized questionnaires during the first, independent review phase. It is collaborative, with reviewers and editors working together with authors to improve each submission during the second, interactive review phase. The process is fast and efficient, as it takes place – almost in real time – on the interactive Review Forum, a state-of-the-art IT platform. And it is transparent and accountable, since the names of editors and reviewers are always disclosed upon publication. Moreover, Frontiers tries to appoint only the world’s top researchers to our editorial boards, further strengthening the quality and rigor of the specialist review.

But let’s look at the numbers.

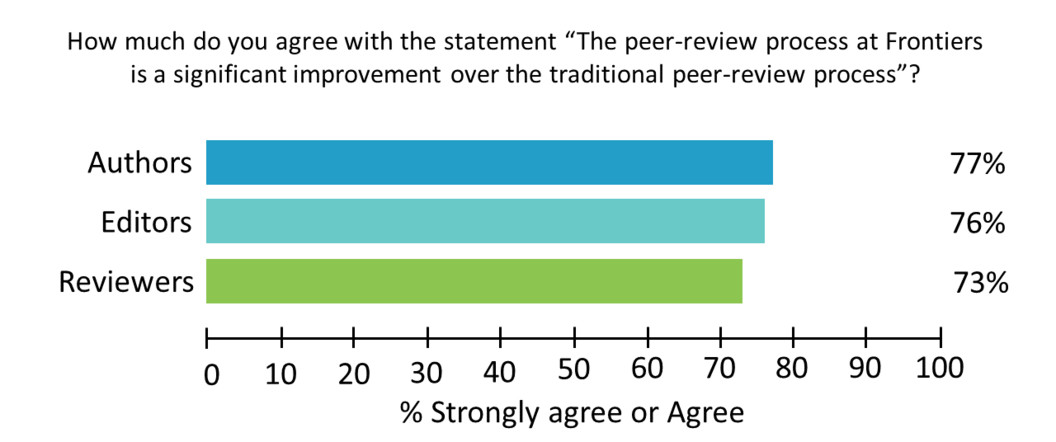

In our post-publication survey, 73-76% of respondents “Strongly agreed” or “Agreed” that Frontiers’ peer-review process is a significant improvement on traditional peer review (Fig. 2), while 86-91% of respondents rated the peer-review process as “Excellent” or “Good” (Fig. 3).

Fig 2. The percentage of respondents to the post-publication survey who either “Strongly agreed” or “Agreed” that Frontiers’ collaborative peer-review process is a significant improvement on traditional peer review. Answers were on a Likert-style scale: “Strongly agree”, “Agree”, “Neither agree nor disagree”, “Disagree”, “Strongly disagree”, “I don’t know”.

Fig 3. The percentage of respondents to the post-publication survey who rated their overall experience of Frontiers’ peer-review process as either “Excellent” or “Good”. Answers were on a Likert-style scale: “Excellent”, “Good”, “Average”, “Below average”, “Poor”, “I don’t know”.

Feedback on our interactive Review Forum was also generally positive: 86-92% of respondents rated the Review Forum as “Excellent” or “Good” (Fig. 4), while 80-85% “Strongly agreed” or “Agreed” that it is fast and efficient (Fig. 5).

Fig 4. The percentage of respondents to the post-publication survey who rated their overall experience of Frontiers’ interactive Review Forum as either “Excellent” or “Good”. Answers were on a Likert-style scale: “Excellent”, “Good”, “Average”, “Below average”, “Poor”, “I don’t know”.

Fig 5. The percentage of respondents to the post-publication survey who “Strongly agreed” or “Agreed” that Frontiers’ interactive Review Forum is fast and efficient. Answers were on the same Likert-style agreement scale as in Fig. 2.

Praise for the review process and Review Forum included:

“The reviewing process was very constructive and fair. It improved the manuscript substantially. Way to go.” [anonymous author]

“The ultimate identification of the reviewers ensures that review comments are respectful as well as helpful, which encourages a constructive review and potential network building between reviewer and authors.” [anonymous editor]

“Great turn-around time and convenient on-line process. The review questionnaire was helpful in focusing my thoughts and comments.” [anonymous reviewer]

“I enjoy reviewing manuscripts for publication in Frontiers. They attract very interesting, high-quality submissions. The review process is accessible and efficient.” [anonymous reviewer]

“I find the Frontiers platform to be excellent to foster transparency and solid scientific dialogue between authors, reviewers and editors. (…) The interface for submitting and receiving comments is extremely easy to use.” [anonymous reviewer]

We also received valuable suggestions on how to improve the peer-review process and Review Forum further. For example, a few authors, editors, and reviewers asked that the review process be double-blind, with author names disclosed upon acceptance; that there be a maximum number of interactions on the Review Forum, to keep the interactive review phase as fast and efficient as possible; that reviewers and authors be able to upload figures to the Review Forum, to illustrate complex arguments; or that the deadlines for submitting review reports be more generous, and displayed more clearly. We are currently considering these proposed changes.

The more frequent suggestions have already been addressed. For example, many reviewers asked for a simpler interface for advising rejection. Certainly, Frontiers has always encouraged rejections of manuscripts with objective errors, ethical problems, or poor presentation, or whose authors do not sufficiently revise their manuscript to address the editors’ and reviewers’ concerns. Previously, reviewers could advise rejection by officially withdrawing from the review. But since January 2016, reviewers can use a prominent “Withdraw from review / recommend rejection” button on the Review Forum to do so.

Another recurring suggestion to which we have responded was for an “editor dashboard”, an interface that shows editors the status of each manuscript and notifies them whenever action is required. We are happy to announce that the editor dashboard will be launched in 2016. More improvements soon!

Feedback on support from Frontiers’ staff

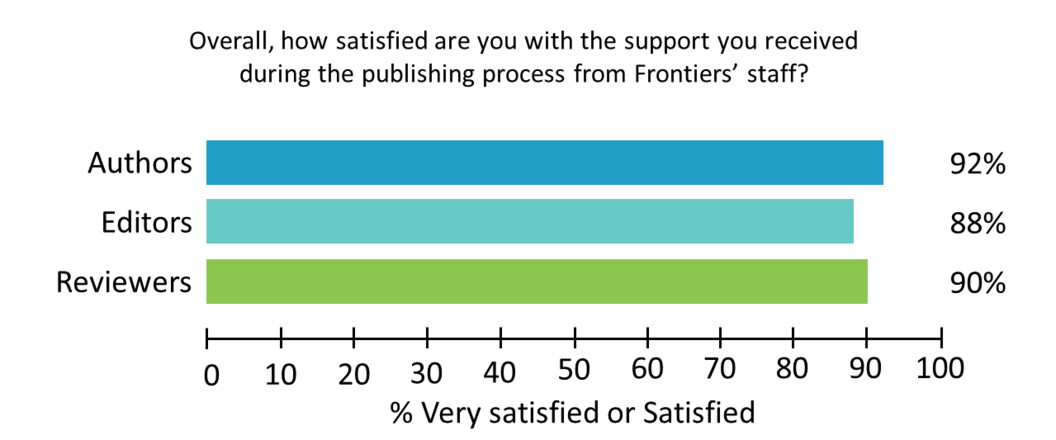

Frontiers has highly qualified and trained staff in Lausanne, including many PhDs and postdocs, whose role is to assist authors, editors, and reviewers in their tasks, to answer any queries, and to resolve technical difficulties. This seems to be highly appreciated by our users: in the post-publication survey, 88-90% of respondents replied they were “Very satisfied” or “Satisfied” with the support received from Frontiers’ staff (Fig. 6). Furthermore, 83-92% of respondents were “Very satisfied” or “Satisfied” with the way our staff answered their queries, both in terms of speed and quality of information (data not shown). Among authors, 96% were “Very satisfied” or “Satisfied” with the speed with which they received the author proofs (data not shown).

Fig 6. The percentage of respondents to the post-publication survey who were either “Very satisfied” or “Satisfied” with the support received from Frontiers’ staff. Answers were on a Likert-style scale: “Very satisfied”, “Satisfied”, “Neither satisfied nor dissatisfied”, “Dissatisfied”, “Very dissatisfied”, “I don’t know”.

Praise for our staff from anonymous authors included:

“Communication and support provided by frontiers and their collaborators/staff was outstanding!”

“The interaction with the staff instilled a high level of confidence in me with regard to the journal.”

“Very, very, very high level of editorial support.”

“The Frontiers team was very supportive and amazingly patient.”

Likelihood of working with Frontiers again

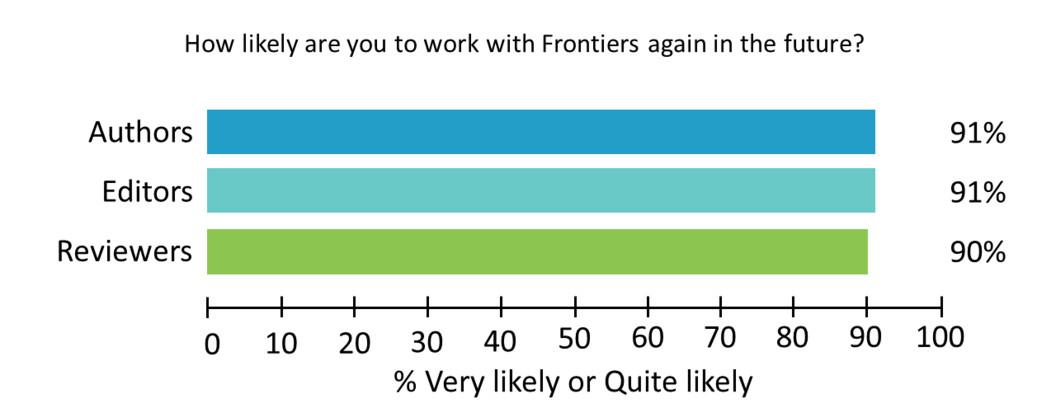

In the post-publication survey, we asked our users how likely they were to submit another manuscript to Frontiers (authors), edit another manuscript (editors), or review another manuscript for Frontiers (reviewers). Between 90% and 91% of respondents rated it “Very likely” or “Quite likely” that they would work with Frontiers again (Fig. 7).

Fig 7. The percentage of respondents to the post-publication survey who rated the likelihood that they would submit, edit, or review another manuscript for Frontiers as either “Very likely” or “Quite likely”. Answers were on a Likert-style scale: “Very likely”, “Quite likely”, “Unlikely”, “Very unlikely”, “I don’t know”.

Conclusion

The satisfaction surveys show that most of our authors, editors, and reviewers are overall very satisfied with Frontiers and are eager to work with us again. But we plan to do even better. Thanks to the support and constructive feedback from the scientific community, we continue to perfect our system and processes, in line with our mission “for scientists, by scientists”. It is going to be another exciting year – stay tuned.

If you have feedback on our peer-review process, our Review Forum, our publishing platform, our policies, or our services, please write to: editorial.office@frontiersin.org or Tweet to: @FrontiersIn